Prompt Engineering: What It Is and 15 Techniques for Effective AI Prompting + Tips

With Artificial Intelligence (AI) tools becoming more common across industries to supplement human effort, prompt engineering has emerged as a pivotal skill.

Prompt engineers are natural language experts responsible for communicating with large language models (LLMs) to achieve desired results.

In this article, we’ll explain the essentials of prompt engineering and its key concepts. We’ll also explore multiple techniques for developing prompts in a precise, context-driven manner.

To further help you understand, we’ll try these prompts with different AI models and see how they respond.

What Is Prompt Engineering?

Prompt engineering refers to creating precise and effective prompts to get context-driven AI outputs from large language models (LLMs). It requires expertise in natural language processing and LLM capabilities.

Prompt engineers have to frame questions and statements that are clear and context-specific, eliciting the most relevant and accurate responses from the AI model.

Whether for generating detailed marketing reports, creating engaging website content, or developing the perfect programming language code, effective prompt engineering is a time-saving skill that industry professionals should add to their portfolios.

Note that a prompt engineer doesn’t need to be familiar with programming languages or have software development skills. Anyone with good language skills and analytical thinking can learn prompt engineering.

Prompting Basics

Before we delve into advanced prompt techniques, let’s go over the basics of prompting to guide language models.

To begin with, prompting means using a natural language, such as English, to explain AI tools what you want and get a relevant response.

Interacting With Language Models Through Prompts

Large language models are generative AI tools that create human-like text based on the input they receive. These inputs are called prompts.

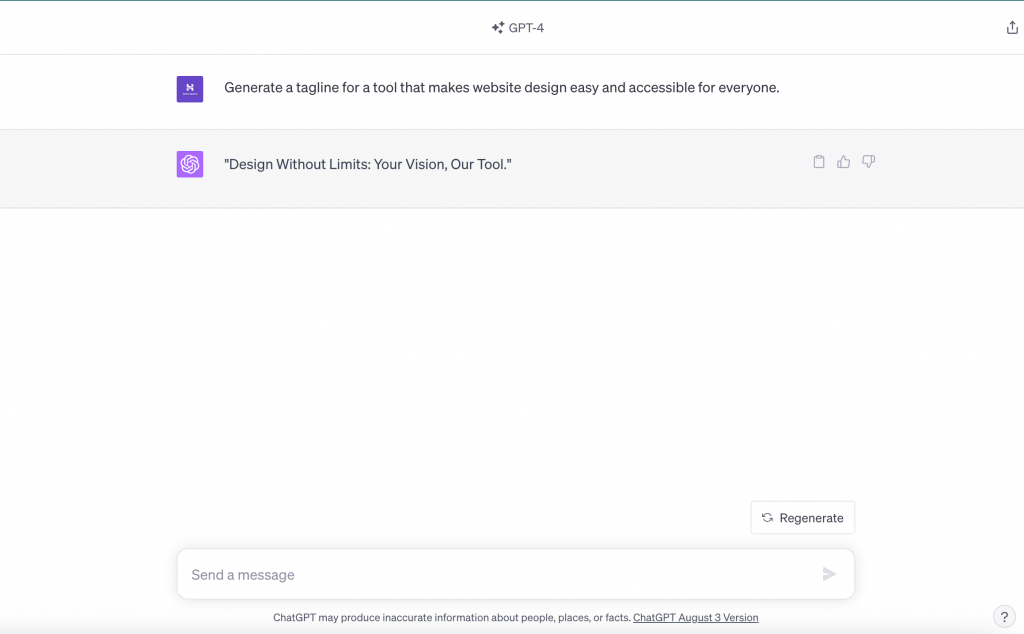

For instance, consider a web agency that wants to generate a tagline for a new website design tool they’ve developed. A well-engineered prompt, in this case, might look like this:

“Generate a tagline for a tool that makes website design easy and accessible for everyone.”

This prompt is clear and instructs the LLM on the specific type of content required – a catchy and concise tagline targeting potential users of a website design tool. The objective is to communicate your goals clearly to the LLM to receive an accurate and relevant output.

Let’s see the result this prompt gives when we feed it to ChatGPT-4:

Want to Learn Prompting?

Check out our learning guides on developing prompts:

AI Prompts for Web Agencies

Best AI Prompts for Web Designers

77 AI Prompts for Web Developers

The Power of Context and Instruction

To maximize the effectiveness of a language model, you must include two components in your prompts: context and instruction.

Adding context helps the model better understand the scenario, while instruction crafting tells it what you specifically want it to do.

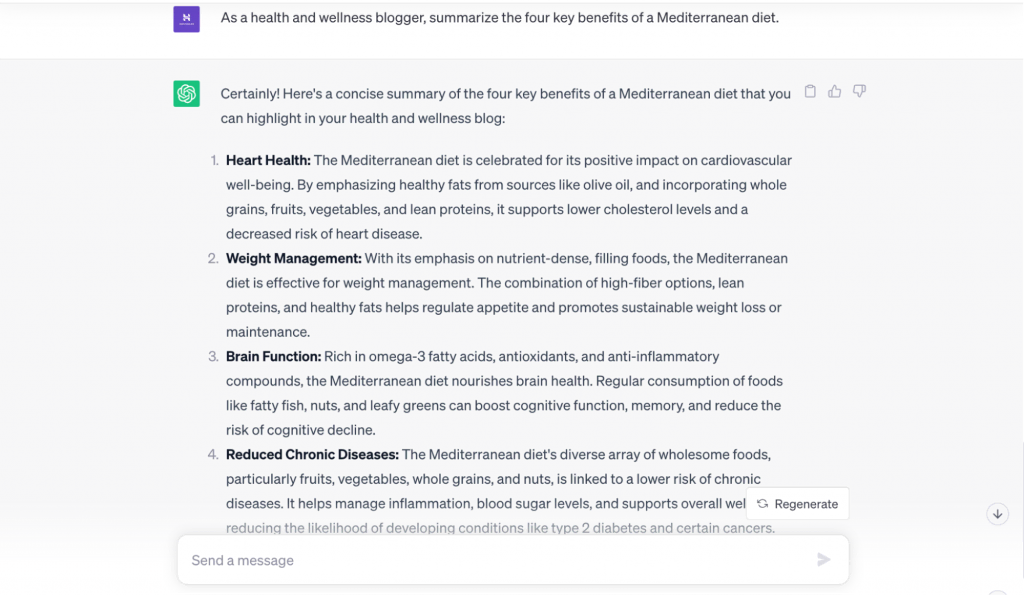

For example, consider the prompt: “As a health and wellness blogger, summarize the four key benefits of a Mediterranean diet.” In this case:

- Context – “As a health and wellness blogger” helps the model understand the perspective and depth from which to approach the summary.

- Instruction – “Summarize the four key benefits of a Mediterranean diet.” tells the model what it needs to focus on and produce as output.

Providing clear and sufficient context and instruction is crucial for several reasons:

- Less ambiguity – reduce the chance of receiving irrelevant or off-topic outputs.

- More control – get predictable responses from the AI.

- Save time – less back-and-forth communication is needed to achieve the desired result.

Here’s the result a context-driven prompt gives when we feed it to ChatGPT-4:

Prompt Elements

When crafting natural language prompts for AI chatbots, understanding the fundamental elements is crucial for achieving the desired output.

Prompt elements guide the AI model in your intended direction, ensuring clarity and context.

Here are some primary components to consider:

Context Setting

Correctly setting the stage can make all the difference in getting the desired result.

Importance of Context

Context gives the AI a clear background or surrounding information that allows it to generate relevant and appropriate content. Without proper context, responses may end up being generic or off-target.

For example, if you ask the model about “Green technologies”, introducing the context of “used in transportation” narrows down the response to green technologies related to vehicles.

Introducing Context

A good prompt is not just about having context but introducing it effectively. The more detailed and specific the context, the better the AI’s understanding will be.

For example, instead of “Recommendations for eCommerce”, specify “Recommendations for improving an eCommerce website’s user experience.”

Clear Instructions

Clear instructions drive contextual AI interactions.

Crafting Precise Instructions

Being explicit and direct with your instructions ensures more precision in AI outputs and leaves less room for ambiguity.

To illustrate, instead of prompting “Tell me about website developers”, specify to “Explain the roles and responsibilities of a website developer.”

Avoiding Vague Language

Ambiguity or contradictory language can confuse the model, leading to less accurate responses.

For example, “Discuss website design” is vague. Instead, “Discuss the trends in website design for eCommerce platforms” is more direct.

Desired Output Format

Specifying the output format is crucial when developing prompts. It helps large language models with enhanced content creation.

Formatting

Dictating the structure or style of the AI’s response can refine outputs to the desired shape or form.

For example – requesting “List the top five tools for website developers” ensures a concise list format.

Tone and Length

Establishing tone helps infuse a natural language emotion into the AI’s answers. Meanwhile, defining the length allows the AI models to determine their response’s depth.

Setting the Tone

You can govern an answer’s emotional resonance or professional grade by indicating the desired tone.

For instance, “Provide a professional critique on the following website design.”

Length Dictates Depth

Indicating whether you need a concise summary or an elaborate explanation ensures tailored AI responses.

For example, “Write a short introduction (30-40 words) about the evolution of responsive website design.”

Prompt Engineering Techniques

Let’s now delve deeper into some foundational prompt engineering techniques and strategies to help you master model prompting.

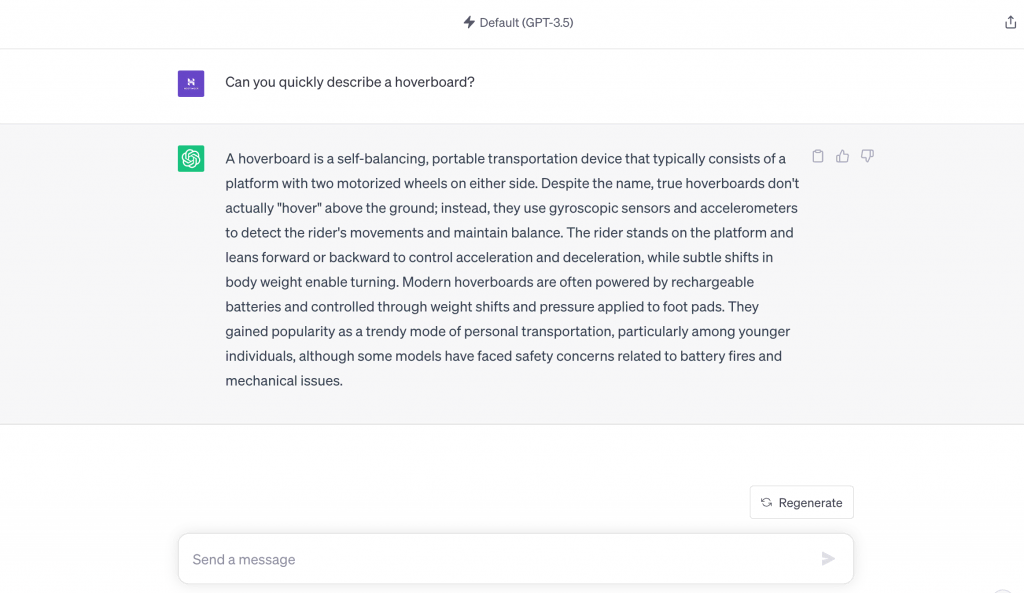

1. Zero-Shot Prompting

Zero-shot prompting involves generating a response without feeding the large language models any examples or prior context. This technique is ideal when you need quick answers to basic questions or general topics.

Here’s an example of zero-shot prompting with ChatGPT-3.5:

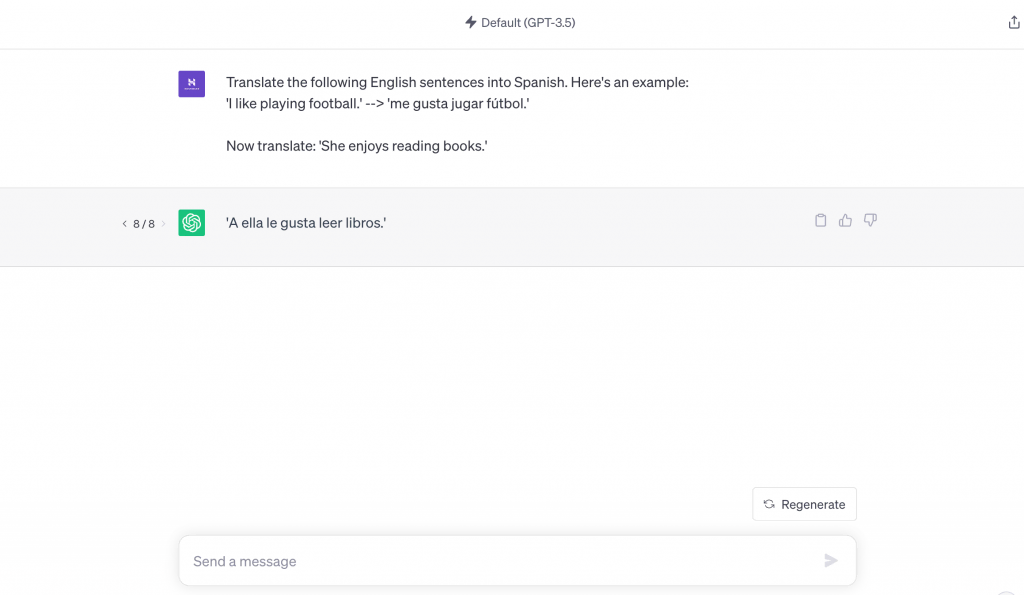

2. One-Shot Prompting

One-shot prompting is about extracting a response based on one example or piece of context provided by the user.

To illustrate, we tried this strategy with ChatGPT-3.5:

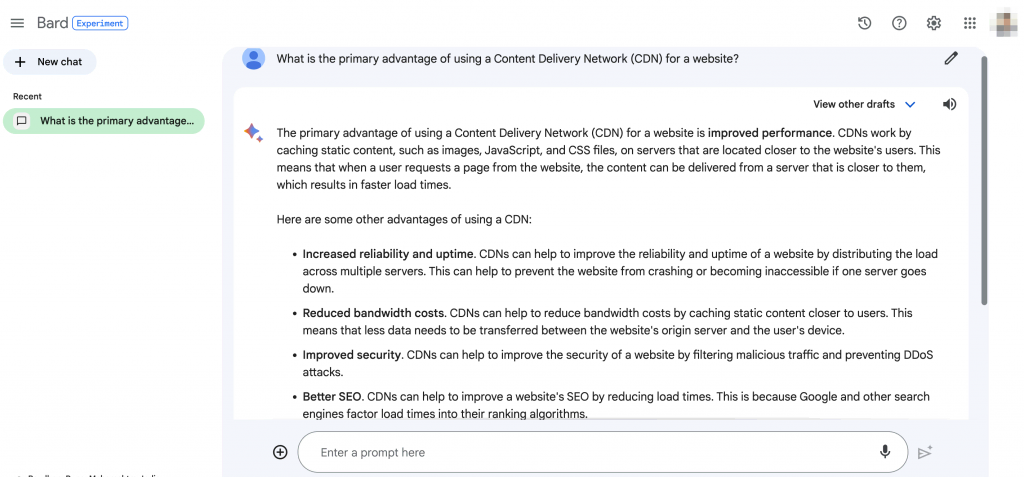

3. Information Retrieval

Information retrieval prompting is when you treat large language models as search engines. It involves asking the generative AI a highly specific question for more detailed answers.

Some LLMs are better at information retrieval prompts due to their data sources. For example, Google’s BARD can access current internet information, while ChatGPT only knows what happened before September 2021.

Here’s an example of information retrieval prompting with BARD:

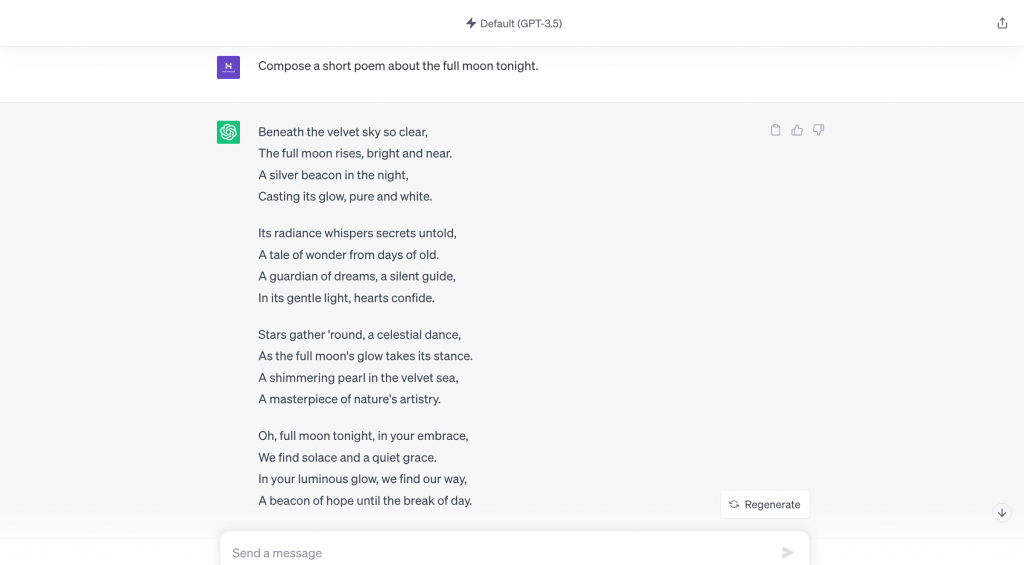

4. Creative Writing

Crafting creative content prompts can help you generate imaginative narratives, captivating stories, and unique textual expressions tailored to your audience’s preferences and interests.

Here’s an example of a prompt that asks the GPT-3.5 generative AI to write creatively:

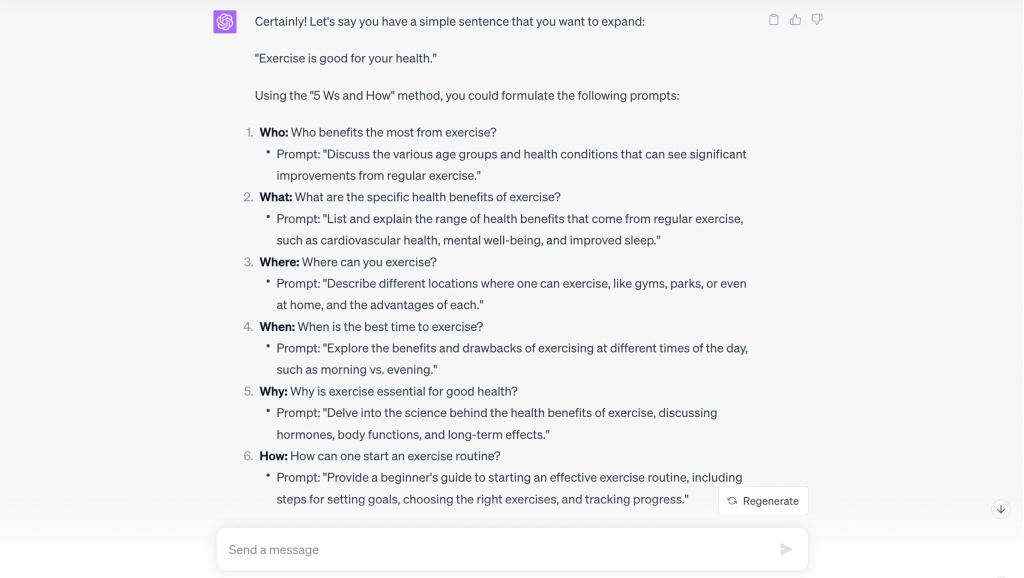

5. Context Expansion

The context expansion technique revolves around enriching the information given to the AI to effectively enhance its understanding.

A good way to write context expansion prompts is through the 5 “Ws and How” method, which involves expanding the query by asking Who, What, Where, When, Why, and How questions related to the subject matter.

Here’s how ChatGPT-4 used the method to expand the context of a simple statement: “Exercise is good for your health”.

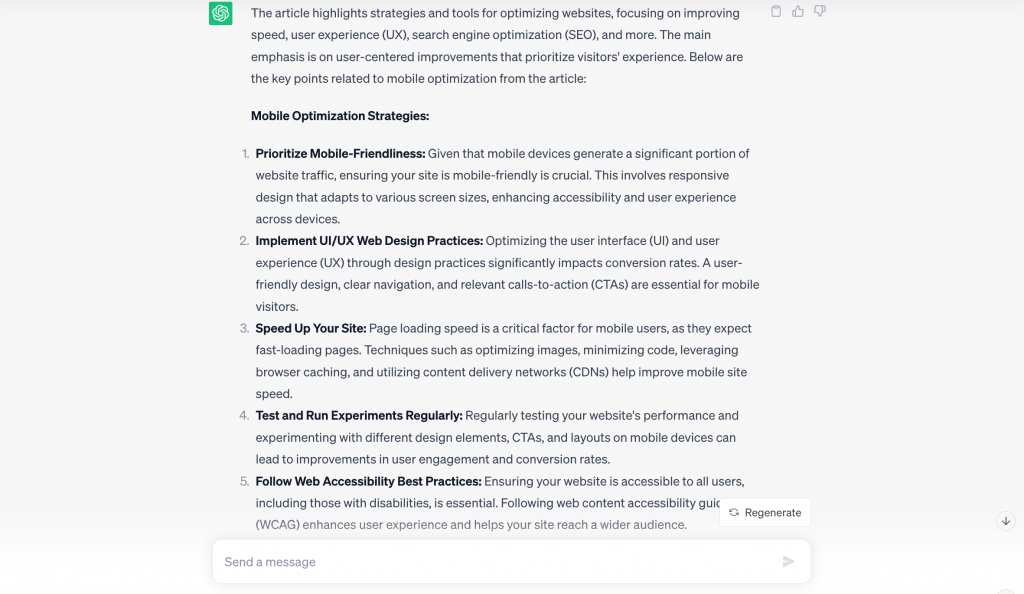

6. Content Summarization With Specific Focus

Effective prompt engineering is also about directing the AI’s attention to specific parts of the input, especially when you want concise summaries with a particular emphasis.

By crafting precise instructions, you can highlight which elements of the text you want the model to prioritize, ensuring the summary captures the essence of the desired focus.

Suppose you have a comprehensive guide on website optimization techniques, but you’re only interested in mobile optimization strategies. In this case, your prompt should clearly indicate it.

For example, “Summarize this article on website optimization, but only focus on strategies related to mobile optimization: [copy+paste article here].”

Here’s how ChatGPT-4 did the task:

Want to Optimize Your Website With AI?

Check out our article on AI for Websites: How Artificial Intelligence Can Help Improve Your Website.

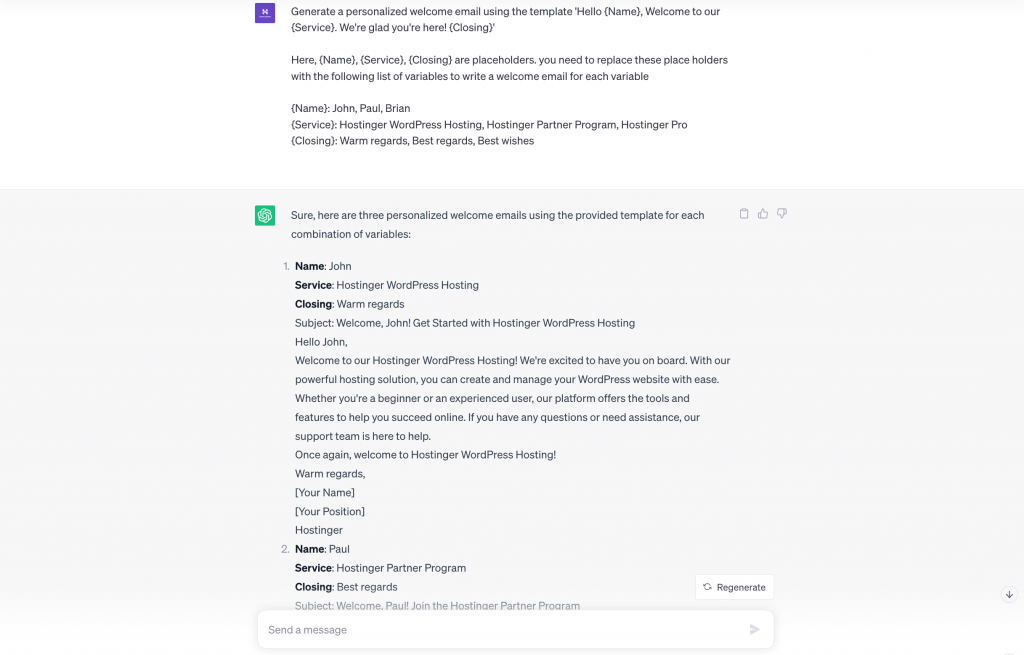

7. Template Filling

Template filling lets you create versatile yet structured content effortlessly. You will use a template with placeholders to enable prompt customization for different situations or inputs while maintaining a consistent format.

Customizing Templates With Variables and Placeholders

In template filling, you can further customize the output of the generative AI tools by defining multiple variables for the placeholders. Typically, content managers or web developers use this strategy to create multiple customized AI-generated content snippets for their websites.

Suppose you’re managing an eCommerce website. In that case, you might use a standard template for product descriptions, allowing the AI to populate fields like product name, features, and price to create a new description with each answer.

This technique is incredibly beneficial when you need AI-generated content to meet particular guidelines or formats.

Another practical use case is the creation of automated but personalized emails. Dynamic template filling guarantees that the overall email structure is consistent, while specific content varies based on user information.

As an example, let’s take this prompt: “Generate a personalized welcome email using the template ‘Hello {Name}, Welcome to our {Service}. We’re glad you’re here! {Closing}'”

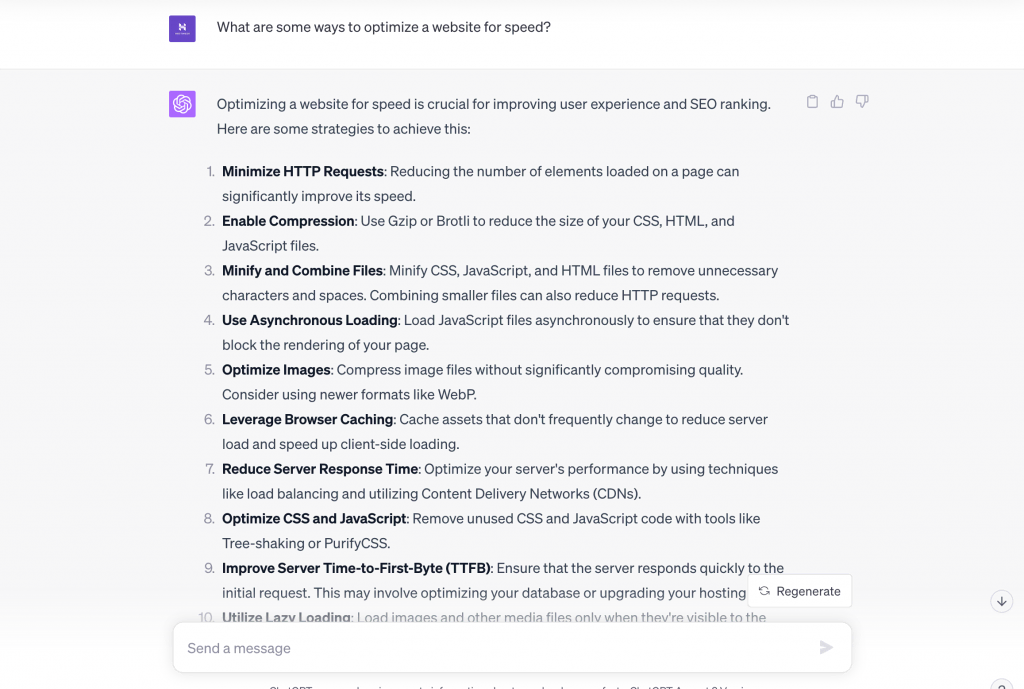

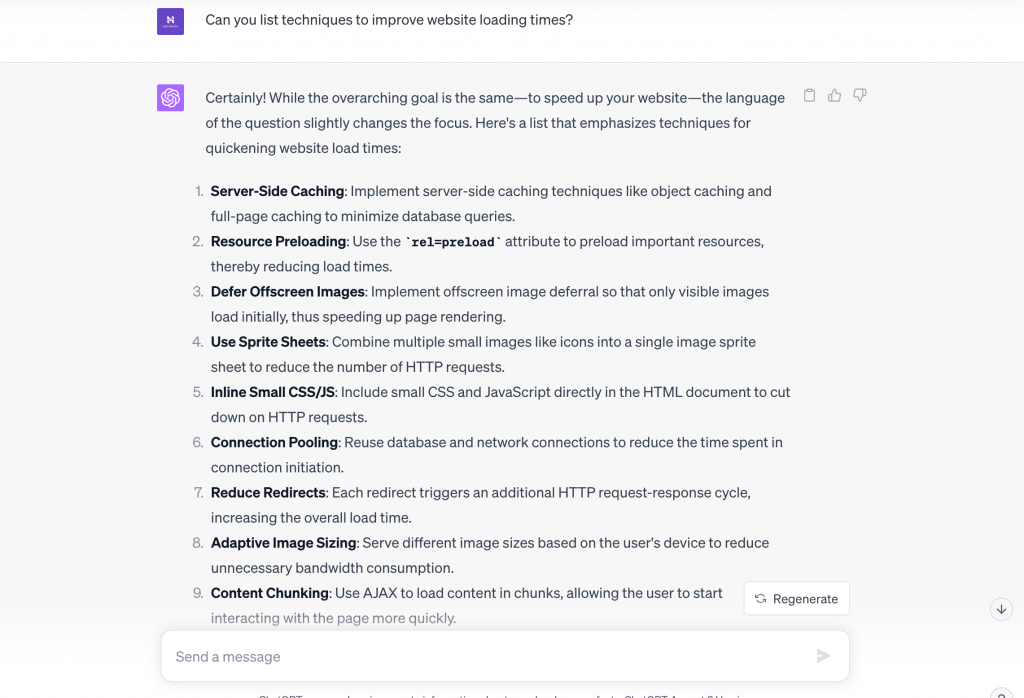

8. Prompt Reframing

Prompt reframing or AI prompt customization lets you subtly change your prompts’ wording while maintaining the query’s original intent.

It can encourage the language model to produce a variety of responses that answer the original intent in different ways.

Wording Techniques to Maintain Intent

One approach is to use synonyms or rephrase questions while keeping the main subject consistent. It will yield different nuances in the responses, which can be particularly useful when you’re looking for various ideas.

Example

Original Prompt – What are some ways to optimize a website for speed?

Reframed Prompt – Can you list techniques to improve website loading times?

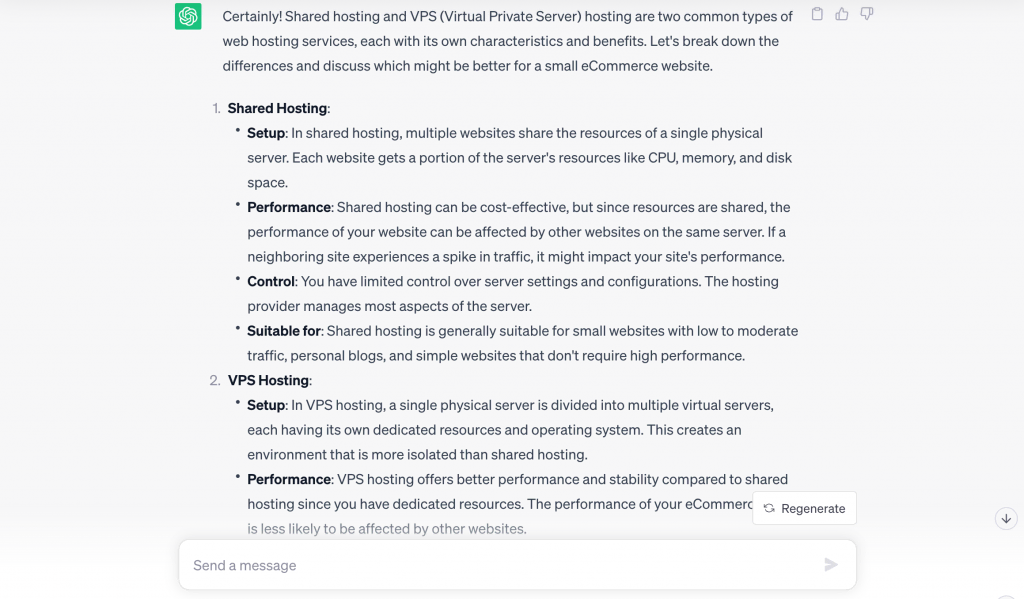

9. Prompt Combination

The prompt combination technique involves merging different instructions or questions into a single, multi-faceted prompt to elicit a comprehensive answer from the AI.

For this tutorial, let’s take this prompt as an example – “Can you explain the differences between shared hosting and VPS hosting and recommend which is better for a small eCommerce website?”

10. Chain-Of-Thought Prompting

Chain-of-thought prompting is about using real-time AI interactions to guide the tool toward more accurate and comprehensive answers.

Instead of relying solely on a single prompt, you can provide a sequence of related examples or questions to refine the original query.

How to Use Chain-of-Thought Prompting

The key to effective chain-of-thought prompting is to break down a complex question or topic into smaller, more manageable parts. Then, present these parts as a sequence of prompts that build upon each other, nudging the AI towards the desired answer.

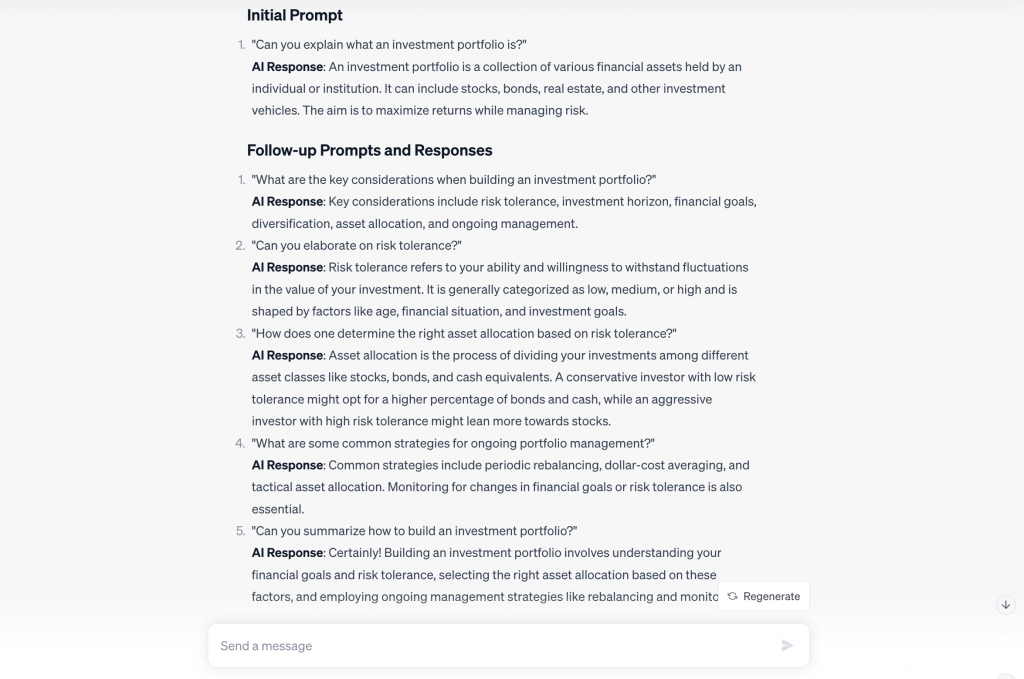

We asked ChatGPT-4 to provide us with an example of chain-of-thought-prompting, and here’s what it came up with:

11. Iterative Prompting

Iterative prompting is another effective technique where you build upon previous responses by asking follow-up questions. With it, you can dive deeper into a topic, extract additional insights, or clarify any ambiguities from the initial output.

How to Use Iterative Prompting

The key to iterative prompting is being attentive to the AI’s initial answer. You can frame your follow-up prompt to elaborate on a specific part of that answer, dig into a subtopic, or seek clarification. It is especially useful when you need to gather detailed information.

LLMs that are more adept at natural language processing (NLP) can expertly handle iterative prompts and craft their responses in a human-like manner.

Here’s a quick example of iterative prompting using Claude AI, an LLM with good NLP capabilities:

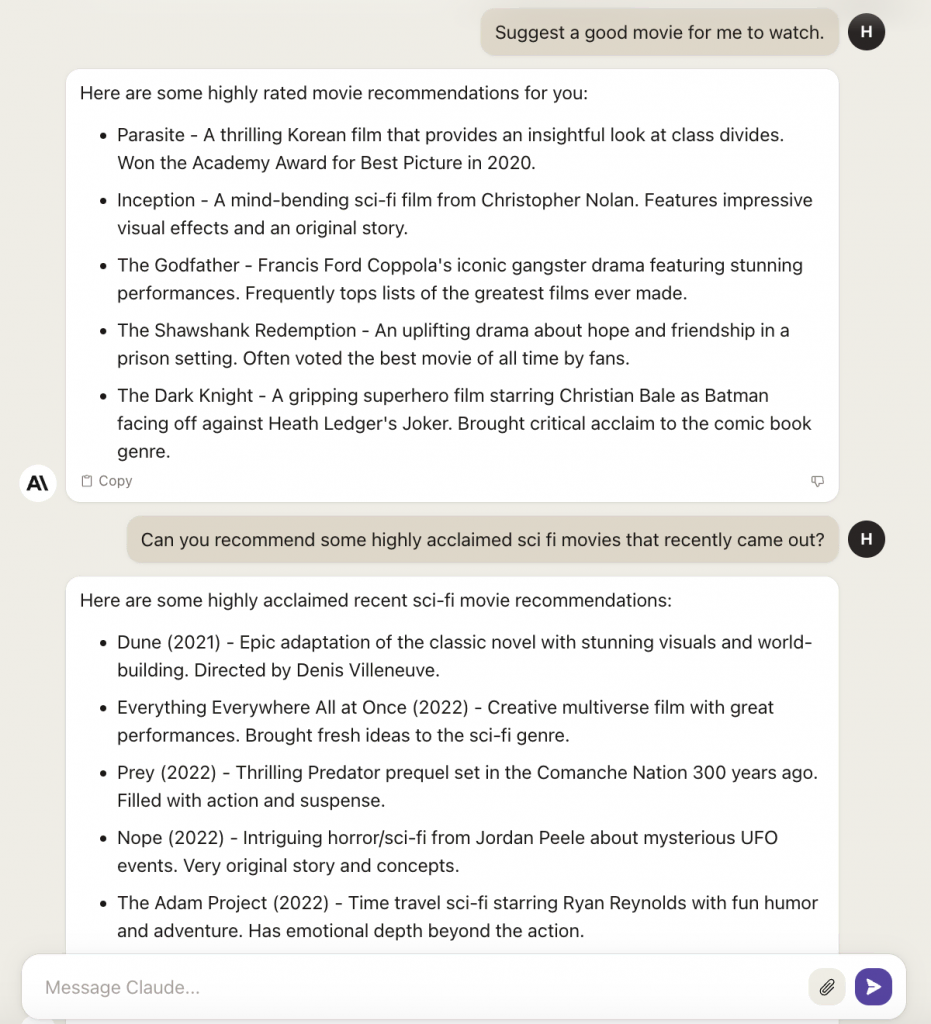

12. Interactive Storytelling and Role-Playing

Interactive storytelling and role-playing with AI is a creative and engaging way to produce dynamic content.

This technique takes advantage of the AI’s ability to adapt its responses based on the prompts and previous interactions, allowing for a fluid and evolving narrative.

Collaborative Storytelling

Collaborative storytelling goes a step further by letting the AI adapt to your inputs in real time. It allows for a back-and-forth exchange where you and the tool contribute to the unfolding story, creating an interactive and immersive experience.

Example

Prompt – I want to start a collaborative storytelling exercise with you. We’ll be writing a fantasy story about a land where magic exists but has been forgotten for a long time.

Here’s the main character and the end goal of the story:

Character: Doraleous, a young farmer who accidentally discovers an ancient spellbook.

Goal: To harness the power of magic to end a famine that has hit their forest hamlet.

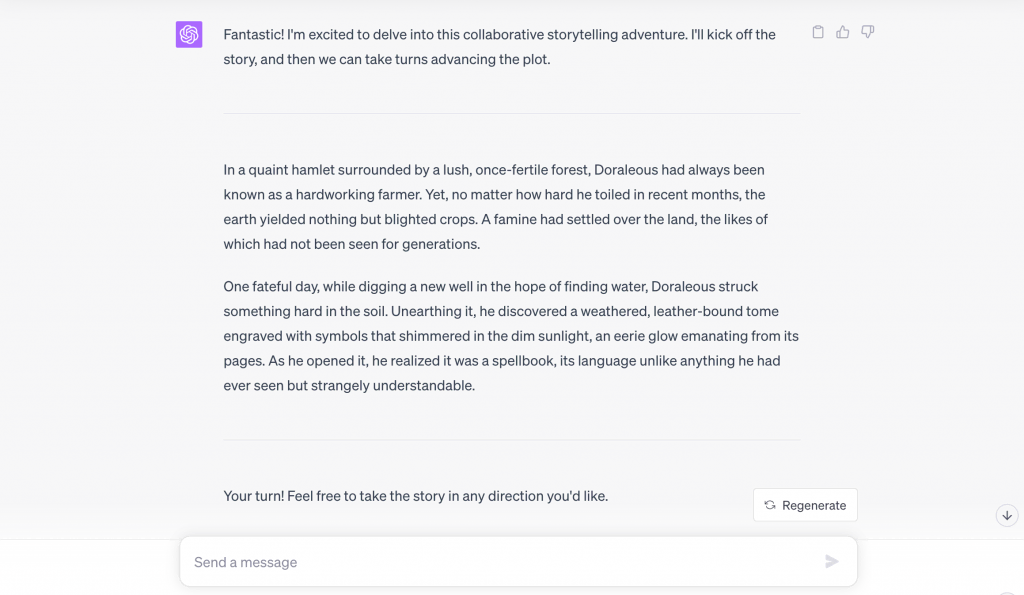

13. Implicit Information Injection

With Implicit Information Injection, you infuse context subtly so the AI model understands your needs without being explicitly told.

Strategies for Implicit Context

This technique can be particularly useful when you’re seeking nuanced or tailored responses. For example, instead of saying, “Write this in a formal tone,” you could use words like “elaborate” or “detailed” to guide the AI towards a more formal output.

Example

Prompt – Can you give some quick tips for boosting website traffic?

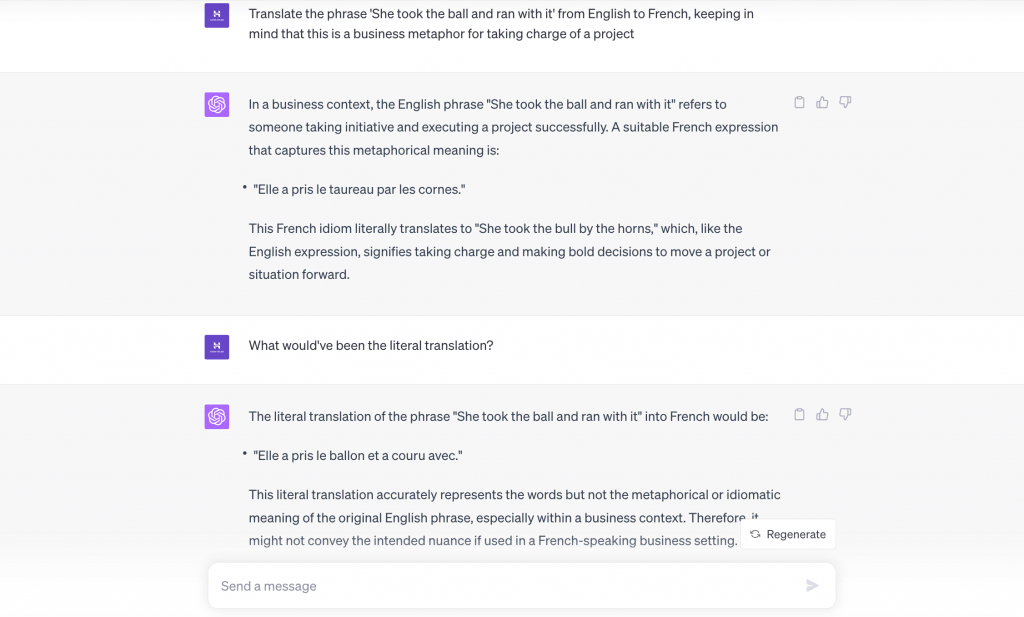

14. Language Translation With Contextual Nuance

Multilingual content generation is not just about converting words from one language to another. Using prompt engineering, you can ensure that the AI considers the cultural or situational context, resulting in a more accurate and nuanced translation.

Improving Translation Accuracy With Context

By adding cultural or situational hints within the prompt, you can guide the AI to provide a translation that fits the context. It is particularly useful in business communications, legal documents, or any text where a nuance can drastically alter the meaning.

Example

Prompt – Translate the phrase ‘She took the ball and ran with it’ from English to French, keeping in mind that this is a business metaphor for taking charge of a project.

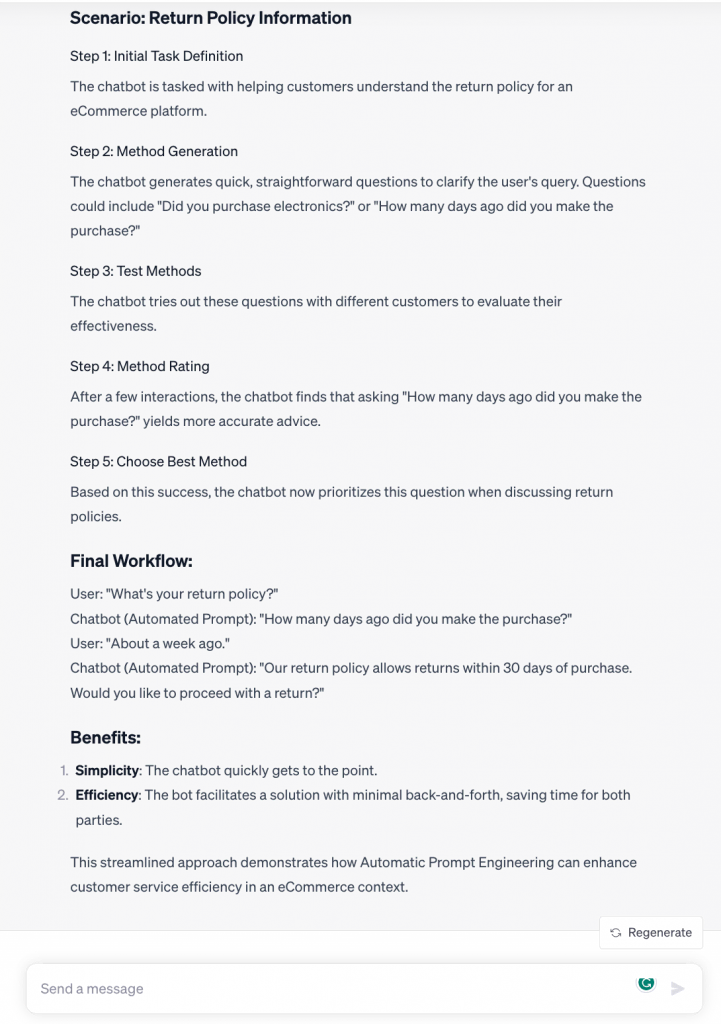

15. Automatic Prompt Engineer

Automatic Prompt Engineering (APE) is an advancement in the field of artificial intelligence that leverages new LLM capabilities to help the AI automatically generate and select instructions for itself.

It transforms the task into a black-box optimization problem, using machine learning algorithms to generate and evaluate candidate solutions heuristically.

An AI agent uses a similar method to break tasks and generate follow-up prompts automatically, completing given jobs with minimal human interaction. To learn more about the tool, check our guide on installing Auto-GPT.

APE Workflow Explained

The APE workflow consists of five main steps:

- You give the chatbot a specific job to do and show some examples.

- The chatbot comes up with different ways to do the job, either by straightforward reasoning or by considering similar tasks it knows about.

- These different methods are then tested in practice.

- The chatbot rates how well each method worked.

- The AI will then choose a better method and apply it.

Benefits and Applications in Machine Learning

When equipped with self-prompting capabilities, generative AI tools can streamline tasks from context-driven data analysis to automated customer service, eliminating the need for constant human-generated prompts.

We asked ChatGPT-4 to explain the APE workflow with a simple example:

Prompt Engineering Use Cases

Let’s explore how you can employ these techniques in various practical scenarios ranging from code development and SEO-friendly content generation to medical diagnosis.

Generating and Debugging Code

Prompt engineering can be highly useful for code generation and debugging. You can craft specific prompts to get AI to produce code snippets, debug existing code, or even suggest alternative coding methods.

For instance, you might use a prompt like “Generate a Python function to calculate the factorial of a number” to get functional code.

Producing SEO-Friendly Articles

Besides asking AI to create an article from scratch, you can use it to improve your current ones. One of the most common AI content optimization practices is copying the whole blog post and certain keywords you want the tool to add naturally.

Customizing Virtual Assistants

With the right prompts, you can tailor virtual assistants to deliver targeted audience communication on your website. This is especially important for businesses that aim to deliver a personalized audience engagement experience.

By using prompt engineering techniques, you can create interactions that are not only functional but also customized to each user’s preferences and history.

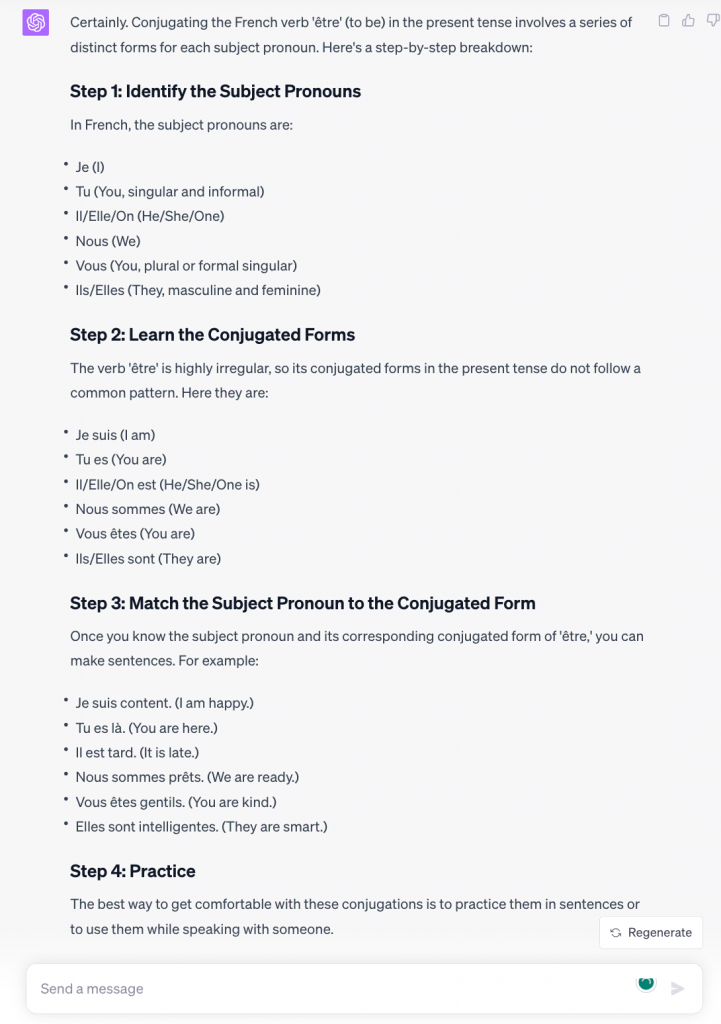

Learning and Education Support

Well-crafted prompts can significantly help with education and learning. Using the discussed techniques, you can become your own prompt engineer and use AI models to better understand complex theories such as derivatives, integration, and grammar.

Example

Prompt – Can you explain how to conjugate the French verb ‘être’ in the present tense, step-by-step?

Analyzing and Interpreting Data

Prompt engineering can help extract useful insights from complex data sets. After giving access to large data sources, you can ask LLMs to analyze the information and generate visual summaries through multimodal AI prompting.

You can craft prompts to get the AI scan for trends, patterns, and relationships in the data that may not be immediately apparent.

For example, as a prompt engineer tasked to analyze and interpret a vast dataset, you can prompt an AI image generator to create charts or graphs to showcase important trends and correlations in the data.

You can then use iterative prompting and ask the AI to interpret the visualization and describe meaningful narratives, insights, and conclusions that can be drawn.

Asking targeted questions through information retrieval prompting can further help you guide the AI to identify significant findings and articulate the “so what?” from the data.

You can then use content summarization to distill complex information into key takeaways.

Researching and Medical Diagnosing

In medical research and diagnosis, prompt engineering can be a game-changer. Through meticulously designed prompts and APE, AI applications can help diagnosticians analyze symptoms, sift through medical literature, and even propose potential treatments.

For instance, a prompt could guide the AI to scan through numerous research papers to identify possible treatments for a rare condition.

However, discussing your symptoms with AI isn’t an alternative to a doctor’s consultation. Remember that AI is just a tool that cannot be fully trusted.

Tips for Successful Prompt Engineering

Effective prompt engineering is about understanding techniques and applying them well. Here are some tips to refine your approach.

Analyze Model Responses

Studying how the model reacts to various prompts is important. This understanding will help you improve your prompts to achieve more accurate and complete responses, especially when working with generative AI tools.

Leverage User Feedback

A highly effective way to improve prompt engineering is to actively give AI applications feedback on how well they answer your prompt.

Feedback integration is one of the most important LLM capabilities that helps generative AI tools with language model refinement. It essentially makes them better equipped at giving you exactly what you want.

Adapt to Model Updates

It is crucial to stay on top of model architecture changes, added data, and new LLM capabilities. This helps you adjust your prompts and better leverage new features.

Collaborate and Community Input

Working with others offers fresh perspectives on prompt engineering. You can ask for community input in forums, social media, and professional networks to improve your prompt crafting skills.

Experiment With Different Prompt Strategies

Trying out different techniques gives you a deeper understanding of what works best. This will make you more flexible in applying prompts to various use cases and contexts.

Future Trends in Prompt Engineering

Integration With Augmented Reality (AR) and Virtual Reality (VR)

Prompt engineering can enhance immersive AR/VR experiences by optimizing AI-driven interactions in 3D environments. Advancements in prompt engineering can enable users to converse with AI characters, request information, and issue commands using natural language in real-time simulated settings.

Prompt engineers can give AI spatial, situational, and conversational context and nurture remarkably human-like exchanges in gaming, training, tourism, and other AR/VR applications.

The prompts can also account for the user’s position, actions, and environment to enable highly relevant responses.

Cross-Domain Creativity

Pushing the boundaries of prompt engineering may inspire AI to generate novel art, music, stories, and other creative works. Prompt engineers can guide AI to blend concepts across different mediums and genres or combine human and machine creativity into collaborative works.

Prompt engineering can continually explore new applications of AI creativity while addressing ethical concerns. If thoughtfully implemented, it could democratize access to artistic AI tools.

Real-Time Language Translation and Communication

Prompt engineering may enable instantaneous translation of spoken and written languages. Prompts could provide context across multiple languages so AI can translate bidirectionally in real time while retaining nuance.

This may enable seamless multilingual communication in business, diplomatic, and personal contexts. Careful prompt engineering is needed to account for regional dialects, cultural nuances, and speech patterns.

Conclusion

Prompt engineering is an emerging discipline within computer science that can revolutionize how we interact with technology. Mastering this skill is essential for harnessing the full spectrum of LLM functionality, from condensing intricate data to performing nuanced language translations.

Although certain language models excel at specific types of prompts, a well-designed query can substantially enhance the response quality from any generative AI tool.

Becoming proficient in prompt engineering involves adopting an iterative mindset. This entails ongoing testing, gathering user insights, tapping into community wisdom, and adapting to new LLM capabilities.

Effectively developing prompts begins with the foundational techniques. Start experimenting with information retrieval, context amplification, summarization, reframing, and iterative prompting to get familiar with the language models.

If you know of any other prompting strategies, please share them in the comments below. Your contributions will enrich the collective knowledge of prompt engineers who read this piece.

Prompt Engineering FAQ

Let’s address some commonly asked questions about prompt engineering.

What Are the Benefits of Using Prompt Engineering?

Prompt engineering enhances the performance of large language models, making them more efficient and versatile at generating useful outputs. It’s vital for tasks such as information retrieval, data analysis, AI content enhancement, and tailored language generation.

How Difficult Is Prompt Engineering?

Prompt engineering is a relatively easy skill to learn. However, we recommend that beginners start with simple queries and then move on to more complex concepts that require a nuanced understanding of natural language processing and effective language modeling to craft optimized data queries.

Can You Get a Job Doing Prompt Engineering?

Yes, as AI and machine learning grow, the demand for specialized roles like prompt engineers is increasing. Many tech companies and research institutions are creating roles that involve responsibilities such as developing prompts as part of data science teams.

What Does a Prompt Engineer Do?

A prompt engineer specializes in crafting queries or instructions to guide large language models and AI systems. They focus on optimizing interactions between the user and the AI, ensuring more accurate and meaningful outputs across various applications, from data analysis to customer engagement.